The Critical Role of Edge Computing in Autonomous Vehicle Technology

In the rapidly evolving landscape of autonomous vehicles, one technological advancement stands out as a game-changer: edge computing. This revolutionary approach to data processing is fundamentally transforming how self-driving cars operate, particularly in the crucial area of decision-making. The integration of edge computing into autonomous vehicle systems represents a paradigm shift that addresses one of the most significant challenges these vehicles face—latency.

Latency, or the delay between when data is collected and when it can be acted upon, has long been a critical bottleneck in autonomous vehicle performance. Even milliseconds of delay can mean the difference between a safe journey and a potential accident. This article explores how edge computing is revolutionizing the autonomous vehicle industry by dramatically reducing latency in decision-making processes, thereby enhancing safety, efficiency, and reliability.

Understanding Latency Challenges in Autonomous Vehicles

Before diving into the solutions offered by edge computing, it’s essential to understand the latency challenges autonomous vehicles face. Self-driving cars rely on a complex network of sensors, cameras, and radar systems to perceive their environment. These systems generate enormous volumes of data, up to 4 terabytes per hour in some advanced models. This data must be processed, analyzed, and translated into driving decisions in real time.

One way to handle this processing load is to hand it off to cloud computing: sending data from the vehicle to remote data centers, processing it there, and then returning instructions to the vehicle. While cloud computing offers tremendous processing power and storage capabilities, this approach introduces significant latency issues:

- Network Transmission Delays: Data must travel from the vehicle to distant data centers and back, introducing delays that can range from tens to hundreds of milliseconds.

- Bandwidth Limitations: The massive amount of data generated by autonomous vehicles can overwhelm network capacity, causing bottlenecks and additional delays.

- Connectivity Issues: Reliance on cloud computing means that autonomous vehicles are vulnerable to network disruptions, potentially compromising safety in areas with poor connectivity.

Consider a scenario where an autonomous vehicle is traveling at 65 mph (approximately 95 feet per second). At this speed, even a 100-millisecond delay in decision-making means the vehicle travels almost 10 feet before responding to a hazard. In emergency situations, this delay could be catastrophic.
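The arithmetic behind this scenario is easy to reproduce. The short Python sketch below converts speed to feet per second and computes the distance covered during a given delay; the 20 ms and 300 ms cases are illustrative values added here, not measurements.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def feet_per_second(mph: float) -> float:
    """Convert miles per hour to feet per second."""
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR


def distance_during_delay_ft(mph: float, delay_s: float) -> float:
    """Distance travelled (in feet) before the vehicle starts to respond."""
    return feet_per_second(mph) * delay_s


if __name__ == "__main__":
    for delay_ms in (20, 100, 300):  # illustrative delays, not measured values
        d = distance_during_delay_ft(65, delay_ms / 1000)
        print(f"{delay_ms:>4} ms at 65 mph -> {d:.1f} ft")
```

At 65 mph, a 100 ms delay works out to about 9.5 ft, consistent with the “almost 10 feet” figure in the scenario; a 300 ms delay would stretch that to nearly 29 ft.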

Edge Computing: The Game-Changing Solution

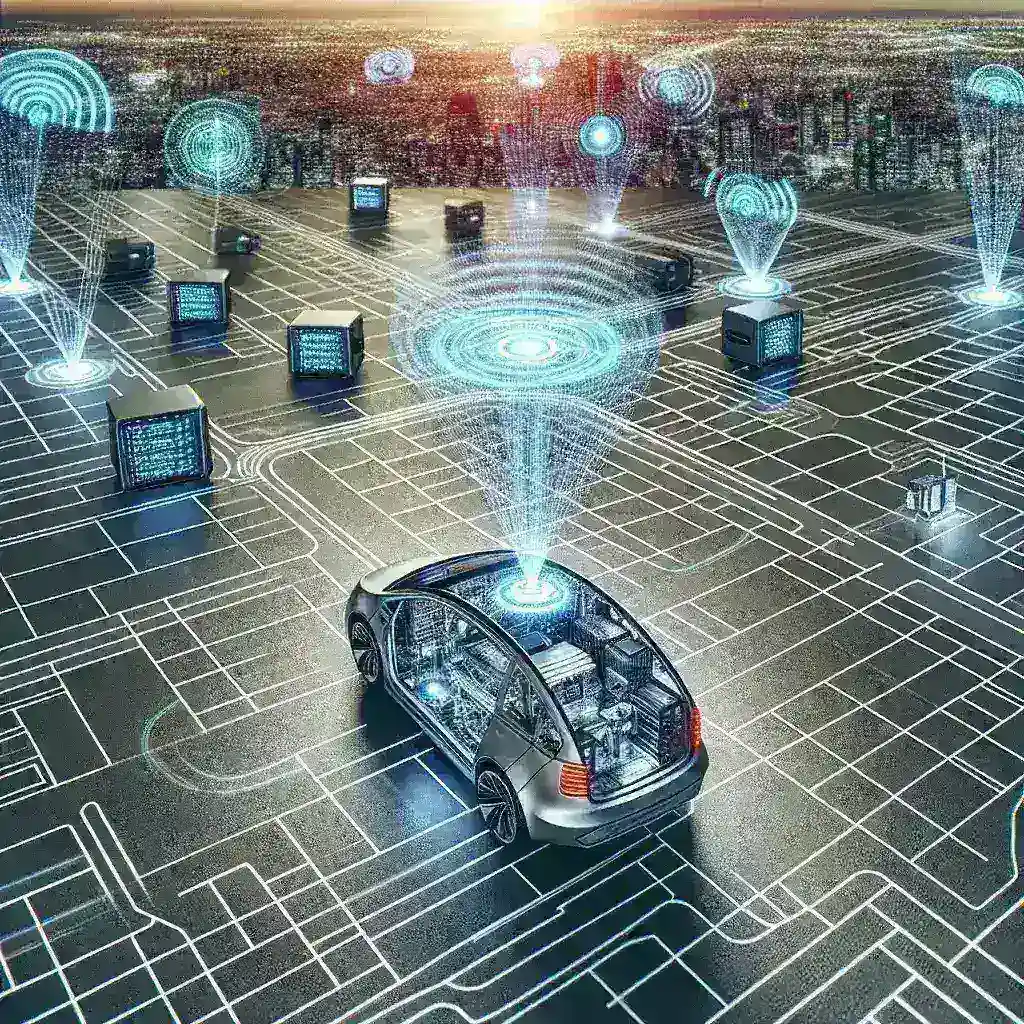

Edge computing fundamentally reimagines how data processing occurs in autonomous vehicles by bringing computation closer to the data source—the vehicle itself. Instead of relying solely on distant cloud servers, edge computing deploys powerful processing capabilities directly within or near the vehicle, enabling real-time data analysis and decision-making.

How Edge Computing Works in Autonomous Vehicles

In an edge computing architecture for autonomous vehicles, specialized hardware and software systems are installed within the vehicle or in nearby infrastructure. These systems are designed to handle the most time-sensitive processing tasks locally, while still leveraging cloud resources for less urgent functions like mapping updates or traffic pattern analysis.

The edge computing workflow in autonomous vehicles typically follows these steps:

- Sensor data is collected from cameras, LiDAR, radar, and other perception systems.

- This data is immediately processed by onboard edge computing systems.

- Critical driving decisions are made locally, without waiting for cloud communication.

- Less time-sensitive data may still be sent to the cloud for deeper analysis and to contribute to fleet-wide learning.

- The vehicle continues to operate safely even if cloud connectivity is temporarily lost.
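The workflow above can be sketched as a small Python class. Everything here is hypothetical: `SensorFrame`, `EdgeNode`, the single obstacle-distance input, and the 20 m braking threshold stand in for a real perception stack and planner. The point is structural: the driving decision never waits on the network, and cloud-bound summaries simply accumulate in a bounded queue while connectivity is unavailable.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Tuple


@dataclass
class SensorFrame:
    timestamp_s: float
    obstacle_distance_m: float  # hypothetical, already-fused perception output


@dataclass
class EdgeNode:
    brake_threshold_m: float = 20.0  # hypothetical tuning value
    # Bounded backlog: if the cloud stays unreachable, the oldest summaries are dropped.
    upload_queue: Deque[Tuple[float, str]] = field(
        default_factory=lambda: deque(maxlen=1000)
    )

    def decide(self, frame: SensorFrame) -> str:
        # Steps 2-3: process locally and decide without any network round trip.
        if frame.obstacle_distance_m < self.brake_threshold_m:
            action = "brake"
        else:
            action = "cruise"
        # Step 4: queue a compact summary for later, non-urgent upload.
        self.upload_queue.append((frame.timestamp_s, action))
        return action

    def sync_to_cloud(
        self, cloud_available: bool, upload: Callable[[Tuple[float, str]], None]
    ) -> int:
        # Step 5: with no connectivity, keep the backlog; driving is unaffected.
        if not cloud_available:
            return 0
        sent = 0
        while self.upload_queue:
            upload(self.upload_queue.popleft())
            sent += 1
        return sent
```

Keeping `decide` and `sync_to_cloud` as separate calls mirrors the separation in the steps: the first sits on the safety-critical path, the second can run whenever bandwidth allows.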

The Tangible Benefits of Reduced Latency

The shift to edge computing delivers several transformative benefits for autonomous vehicle operations:

1. Near-Instantaneous Decision-Making

By processing data locally, edge computing reduces decision-making latency from hundreds of milliseconds to just a few milliseconds. This near-instantaneous response time enables autonomous vehicles to react to unexpected obstacles, pedestrians, or changing traffic conditions with human-like or even superior reflexes.

Research conducted by the Massachusetts Institute of Technology (MIT) has demonstrated that edge computing can reduce response times in autonomous vehicles by up to 80% compared to cloud-dependent systems. This improvement is particularly crucial for emergency braking scenarios, where every millisecond counts.

2. Enhanced Safety and Reliability

With reduced latency comes significantly improved safety. Edge computing enables autonomous vehicles to make split-second decisions based on real-time data, substantially reducing the risk of accidents. Moreover, by reducing dependence on external networks, edge computing creates more resilient autonomous systems that can maintain safe operation even when connectivity is compromised.

A 2022 study published in the IEEE Transactions on Intelligent Transportation Systems found that autonomous vehicles equipped with edge computing capabilities demonstrated a 63% improvement in hazard response times compared to cloud-only systems, potentially preventing numerous accidents in real-world testing scenarios.

3. Improved Passenger Experience

Beyond safety, reduced latency contributes to a smoother, more comfortable passenger experience. Edge computing enables more natural vehicle behavior with smoother acceleration, deceleration, and turning. These improvements help reduce motion sickness—a common concern in autonomous vehicles—and build passenger trust in the technology.

4. Bandwidth Optimization

By processing most data locally and sending only selected information to the cloud, edge computing dramatically reduces the bandwidth requirements of autonomous vehicles. This not only reduces operational costs but also makes autonomous vehicle deployment more feasible in areas with limited network infrastructure.
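A rough calculation shows why local processing matters for bandwidth. The Python sketch below converts a data-generation rate into the sustained uplink it would require; it borrows the 4 TB per hour upper-end figure quoted earlier in this article, and the 1% upload fraction is purely an illustrative assumption.

```python
def sustained_uplink_gbps(terabytes_per_hour: float, upload_fraction: float = 1.0) -> float:
    """Average uplink rate (Gbit/s) needed to ship a fraction of the generated data.

    Uses decimal units: 1 TB = 1e12 bytes, 1 Gbit = 1e9 bits.
    """
    bits_per_hour = terabytes_per_hour * 1e12 * 8 * upload_fraction
    return bits_per_hour / 3600 / 1e9


raw = sustained_uplink_gbps(4.0)  # everything sent to the cloud
filtered = sustained_uplink_gbps(4.0, upload_fraction=0.01)  # hypothetical 1% upload
print(f"raw: {raw:.2f} Gbit/s, filtered: {filtered * 1000:.0f} Mbit/s")
```

Streaming everything would require roughly 8.9 Gbit/s of sustained uplink per vehicle, while uploading only 1% brings that down to roughly 89 Mbit/s.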

Edge Computing Architectures for Autonomous Vehicles

The implementation of edge computing in autonomous vehicles takes several forms, each with unique advantages for specific use cases:

In-Vehicle Edge Computing

The most direct application of edge computing in autonomous vehicles involves powerful onboard computers that process sensor data in real time. These systems typically feature specialized hardware such as Graphics Processing Units (GPUs), Field-Programmable Gate Arrays (FPGAs), or Application-Specific Integrated Circuits (ASICs) designed specifically for AI and machine learning workloads.

Modern autonomous vehicles often contain multiple edge computing nodes, each dedicated to specific functions:

- Perception Systems: Process camera, LiDAR, and radar data to identify objects and map the surrounding environment.

- Decision Systems: Analyze the processed environmental data to determine appropriate driving actions.

- Control Systems: Translate decisions into precise commands for the vehicle’s mechanical components.

These in-vehicle edge computing systems can handle up to 90% of all data processing needs locally, dramatically reducing dependence on external networks and keeping network round trips out of the loop for critical driving decisions.
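The perception, decision, and control split can be illustrated with a minimal Python pipeline that times each stage against an end-to-end budget. The stage functions, the radar-range input, the 25 m braking distance, and the 100 ms budget are all hypothetical placeholders; production systems run these stages on dedicated accelerators under a real-time scheduler rather than in a single Python loop.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]


def perceive(frame: Dict[str, List[float]]) -> float:
    # Hypothetical perception: distance (m) to the nearest radar return.
    return min(frame["radar_ranges_m"])


def decide(nearest_m: float) -> str:
    # Hypothetical decision rule: brake if anything is within 25 m.
    return "brake" if nearest_m < 25.0 else "cruise"


def control(action: str) -> Dict[str, int]:
    # Translate the decision into actuator commands (percent of full range).
    if action == "brake":
        return {"brake_pct": 100, "throttle_pct": 0}
    return {"brake_pct": 0, "throttle_pct": 20}


def run_pipeline(stages: List[Stage], frame: Any, budget_ms: float):
    """Run stages in order, recording per-stage latency in milliseconds."""
    timings: Dict[str, float] = {}
    data = frame
    for name, fn in stages:
        start = time.perf_counter()
        data = fn(data)
        timings[name] = (time.perf_counter() - start) * 1000.0
    within_budget = sum(timings.values()) <= budget_ms
    return data, timings, within_budget


PIPELINE: List[Stage] = [("perception", perceive), ("decision", decide), ("control", control)]
command, timings, ok = run_pipeline(
    PIPELINE, {"radar_ranges_m": [42.0, 18.5, 60.0]}, budget_ms=100.0
)
```

Recording per-stage timings, rather than only the total, makes it easier to see which node would need faster hardware if the budget is exceeded.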

Roadside Edge Computing Infrastructure

Complementing in-vehicle systems, roadside edge computing infrastructure provides additional processing power and facilitates vehicle-to-infrastructure (V2I) communication. This approach involves deploying edge servers at strategic locations such as traffic signals, highway on-ramps, and urban intersections.

Roadside edge computing offers several advantages:

- Extended Perception: Provides vehicles with information beyond their direct line of sight, such as traffic conditions around corners or approaching emergency vehicles.

- Computational Offloading: Allows vehicles to temporarily offload complex processing tasks to nearby infrastructure, conserving onboard resources.

- Collective Intelligence: Enables multiple vehicles to share processed data through the roadside infrastructure, creating a more comprehensive understanding of traffic conditions.
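Whether computational offloading pays off depends on whether the round trip to the roadside unit beats local execution. The Python sketch below encodes that comparison; the function name, its parameters, and the example numbers are hypothetical, and a real scheduler would also account for link loss, queueing at the roadside server, and result freshness.

```python
def choose_executor(
    local_ms: float,
    remote_compute_ms: float,
    rtt_ms: float,
    payload_mb: float,
    uplink_mbps: float,
    deadline_ms: float,
) -> str:
    """Return "roadside" if offloading is faster and meets the deadline, else "local"."""
    transfer_ms = payload_mb * 8 / uplink_mbps * 1000  # MB -> Mbit, then seconds -> ms
    remote_total_ms = rtt_ms + transfer_ms + remote_compute_ms
    if remote_total_ms < local_ms and remote_total_ms <= deadline_ms:
        return "roadside"
    # Default to on-board execution, which does not depend on the link.
    return "local"
```

For example, with a 2 MB payload, an 80 ms local run time, a 5 ms round trip, and 10 ms of roadside compute, a 1,000 Mbit/s link makes offloading worthwhile (about 31 ms in total), while a 100 Mbit/s link does not, because the transfer alone takes 160 ms.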

Cities like Singapore and Pittsburgh have begun implementing roadside edge computing networks specifically designed to support autonomous vehicle testing and deployment. These networks have demonstrated latency reductions of up to 75% compared to traditional cloud-based approaches.

Hybrid Edge-Cloud Architectures

While edge computing excels at reducing latency for time-critical functions, cloud computing still offers advantages for certain aspects of autonomous vehicle operation. The most effective architectures employ a hybrid approach that leverages both paradigms:

- Edge Computing: Handles real-time perception, decision-making, and vehicle control.

- Cloud Computing: Manages functions like high-definition mapping, traffic pattern analysis, software updates, and fleet-wide learning.

This balanced approach ensures that autonomous vehicles benefit from both the low latency of edge computing and the vast computational resources of cloud infrastructure.
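The hybrid split can be expressed as a simple routing rule: any task whose deadline is no longer than a plausible cloud round trip must stay on the edge. The Python sketch below assumes a hypothetical 150 ms worst-case cloud round trip and illustrative task deadlines; neither value comes from a specific system, and the rule ignores cloud compute time for simplicity.

```python
from dataclasses import dataclass
from typing import Dict, List

ASSUMED_CLOUD_RTT_MS = 150.0  # hypothetical worst-case round trip to the cloud


@dataclass(frozen=True)
class Task:
    name: str
    deadline_ms: float  # time by which a result is needed


def route(task: Task, cloud_rtt_ms: float = ASSUMED_CLOUD_RTT_MS) -> str:
    # If the network round trip alone could exceed the deadline, run it on the edge.
    return "edge" if task.deadline_ms <= cloud_rtt_ms else "cloud"


def plan(tasks: List[Task]) -> Dict[str, str]:
    return {t.name: route(t) for t in tasks}


TASKS = [
    Task("perception", 50),
    Task("vehicle_control", 10),
    Task("hd_map_update", 60_000),
    Task("fleet_learning_upload", 3_600_000),
]
```

Calling `plan(TASKS)` keeps perception and control on the edge and sends map updates and fleet learning to the cloud, matching the division of labor described above.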

Technical Innovations Enabling Edge Computing in Autonomous Vehicles

The integration of edge computing into autonomous vehicles has been made possible by several key technological innovations:

Advanced Hardware Accelerators

Traditional central processing units (CPUs) lack the specialized architecture needed for the AI workloads that power autonomous driving. The industry has responded with custom hardware accelerators designed specifically for these applications:

- Neural Processing Units (NPUs): Specialized processors optimized for neural network operations, offering 10-50x performance improvement over general-purpose CPUs for AI workloads.

- Tensor Processing Units (TPUs): Custom-designed AI accelerators that excel at the matrix operations common in machine learning algorithms.

- Automotive-Grade GPUs: Hardened versions of graphics processing units designed to withstand the rigors of automotive environments while delivering massive parallel processing capability.

Companies like NVIDIA, Intel’s Mobileye, and Tesla have developed custom silicon specifically for autonomous vehicle edge computing, with each generation delivering substantial gains in performance per watt, a critical metric for vehicle-based systems with limited power budgets.

Edge-Optimized Machine Learning Models

Beyond hardware advances, significant progress has been made in developing machine learning models that can run efficiently at the edge. Techniques like model compression, quantization, and knowledge distillation have enabled complex AI algorithms to operate within the constraints of vehicular edge computing systems, while federated learning helps those models keep improving across a fleet:

- Model Compression: Reduces the size of neural networks by 10-100x while maintaining comparable accuracy.

- Quantization: Decreases computational requirements by using lower-precision calculations (8-bit or even 4-bit instead of 32-bit floating-point).

- Knowledge Distillation: Trains a smaller “student” network to reproduce the outputs of a larger “teacher” network, retaining much of its accuracy at a fraction of the compute cost.

- Federated Learning: Enables models to improve based on distributed data without centralizing sensitive information.

These optimizations allow autonomous vehicles to run sophisticated AI algorithms locally, making split-second decisions without cloud dependency.
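Quantization is the easiest of these techniques to show in a few lines. The Python sketch below applies symmetric, per-tensor 8-bit quantization to a list of weights and maps them back, so the rounding error can be checked. Real toolchains use calibrated and often per-channel scales, so treat this as an illustration of the idea rather than any particular framework’s method.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map 8-bit integers back to approximate floating-point values."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0, 0.731]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of four, and the reconstruction error per weight is bounded by half the scale factor.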

5G and Advanced Networking

While edge computing reduces the need for constant high-bandwidth communication, connectivity still plays an important role in hybrid edge-cloud architectures. The rollout of 5G networks provides several advantages for autonomous vehicle edge computing:

- Network Slicing: Dedicates portions of the network specifically for autonomous vehicle communications, ensuring reliable performance.

- Multi-access Edge Computing (MEC): Integrates computing resources directly into the 5G network infrastructure, creating an additional layer of edge computing capability.

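- Ultra-Reliable Low-Latency Communication (URLLC): A 5G service category for critical communications, designed to target user-plane latency of about 1 millisecond with very high reliability (on the order of 99.999% successful delivery).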

These networking advances complement in-vehicle edge computing by providing reliable connectivity when cloud resources are needed, while still maintaining low latency.
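Even a very reliable link misses occasionally, which is one reason safety-critical control stays on the vehicle. The Python sketch below estimates how many messages per hour would miss their latency budget at a given delivery reliability; the 99.999% figure corresponds to the commonly cited URLLC reliability target, and the rate of 100 messages per second is a hypothetical example.

```python
def expected_misses(messages_per_second: float, duration_s: float, reliability: float) -> float:
    """Expected number of messages that are lost or late over the given duration."""
    total_messages = messages_per_second * duration_s
    return total_messages * (1.0 - reliability)


per_hour = expected_misses(messages_per_second=100, duration_s=3600, reliability=0.99999)
print(f"~{per_hour:.1f} late or lost messages per hour")
```

About 3.6 misses per hour at that rate is small, but not zero, so the vehicle must be able to act safely without waiting on any individual network message.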

Real-World Applications and Case Studies

The theoretical benefits of edge computing for autonomous vehicles are compelling, but how is this technology performing in real-world applications? Several pioneering projects illustrate the transformative impact of edge computing on autonomous vehicle latency:

Waymo’s Fifth-Generation Self-Driving System

Waymo, a leader in autonomous vehicle technology, has incorporated advanced edge computing capabilities into its fifth-generation self-driving system. This system features custom-designed compute platforms that process sensor data onboard the vehicle with minimal latency.

In urban testing environments, Waymo’s edge-powered vehicles have demonstrated the ability to detect and respond to unexpected obstacles in as little as 0.1 seconds, faster than typical human driver reaction times. This performance represents a 70% improvement over earlier generations that relied more heavily on cloud processing.

Particularly impressive is the system’s ability to maintain this performance level even in areas with poor network connectivity, such as underground parking structures or remote highways, highlighting the reliability advantages of edge computing.

Tesla’s Full Self-Driving Computer

Tesla has taken a particularly aggressive approach to edge computing with its Full Self-Driving (FSD) computer. Rather than relying on cloud resources, Tesla designed a custom edge computing solution featuring:

- Two AI accelerator chips developed in-house

- A combined 144 TOPS (trillion operations per second) of processing power

- Redundant design for safety-critical operations

This powerful edge computing platform enables Tesla vehicles to process over 2,300 frames per second from multiple cameras and sensors, making driving decisions with latency measured in milliseconds. The company reports that its neural networks can run up to 21 times faster on this dedicated hardware compared to previous GPU-based solutions.

Tesla’s approach demonstrates how purpose-built edge computing hardware can dramatically reduce latency in autonomous driving applications, enabling more responsive and safer vehicle behavior.

Autonomous Truck Platooning

Edge computing has proven particularly valuable for autonomous truck platooning, where multiple trucks travel in close formation to reduce aerodynamic drag and improve fuel efficiency. This application requires extremely low-latency communication between vehicles to maintain safe following distances.

A European research consortium demonstrated a truck platooning system using vehicle-to-vehicle edge computing that achieved end-to-end latency of less than 5 milliseconds for critical safety communications. This ultra-low latency enabled trucks to maintain following distances as close as 10 meters while traveling at highway speeds—something impossible with cloud-dependent systems.

The project estimated fuel savings of 10-15% while maintaining or improving safety compared to human-driven trucks, illustrating how edge computing’s latency benefits translate directly to economic and environmental advantages.
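The platooning figures can be put in perspective by computing how far a following truck travels before it learns that the lead truck has started braking. If both trucks brake equally hard to a standstill, the follower’s stopping distance exceeds the leader’s by exactly that distance, so it comes straight out of the following gap. The sketch assumes a highway speed of 80 km/h and compares the 5 ms result reported above with 100 ms and 300 ms delays chosen here to represent cloud-style round trips; those two values are illustrative, not measured.

```python
def metres_travelled(speed_kmh: float, latency_ms: float) -> float:
    """Distance covered (m) during the communication delay."""
    return speed_kmh / 3.6 * latency_ms / 1000.0


GAP_M = 10.0      # following distance from the consortium demonstration
SPEED_KMH = 80.0  # assumed highway speed

for latency_ms in (5, 100, 300):
    # Assuming equal braking performance, this distance is lost from the gap.
    d = metres_travelled(SPEED_KMH, latency_ms)
    print(f"{latency_ms:>3} ms -> {d:.2f} m ({d / GAP_M:.0%} of the gap)")
```

At 5 ms the delay consumes about 1% of a 10 m gap; at 300 ms it would consume about two thirds of it before the follower even begins to brake.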

Challenges and Limitations

Despite its transformative potential, implementing edge computing in autonomous vehicles presents several significant challenges:

Hardware Constraints

Autonomous vehicles operate under strict constraints related to power consumption, heat generation, and physical space. Edge computing hardware must deliver massive computational power while adhering to these limitations:

- Power Efficiency: Especially critical for electric vehicles, where computing workloads directly impact driving range.

- Thermal Management: High-performance computing generates considerable heat, requiring sophisticated cooling solutions compatible with automotive environments.

- Form Factor: Edge computing systems must fit within the limited space available in vehicle designs without compromising passenger or cargo capacity.

Addressing these constraints requires specialized hardware design approaches and often involves tradeoffs between computing power, energy consumption, and cost.
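The power-efficiency point can be quantified with a back-of-the-envelope estimate: the compute load’s share of total energy use per mile is roughly the share of range it costs. The sketch below uses hypothetical inputs (a 500 W compute load and 250 Wh per mile of driving energy, held constant across speeds for simplicity), not figures for any particular vehicle.

```python
def range_loss_fraction(compute_w: float, drive_wh_per_mile: float, avg_speed_mph: float) -> float:
    """Fraction of driving range lost to a constant on-board compute load.

    Assumes driving energy per mile does not change with speed (a simplification).
    """
    compute_wh_per_mile = compute_w / avg_speed_mph  # W / (mi/h) = Wh per mile
    return compute_wh_per_mile / (drive_wh_per_mile + compute_wh_per_mile)


for speed in (15, 30, 60):  # slow city traffic to highway, hypothetical
    loss = range_loss_fraction(compute_w=500, drive_wh_per_mile=250, avg_speed_mph=speed)
    print(f"{speed} mph: {loss:.1%} of range")
```

Under these assumptions the compute load costs about 6% of range at 30 mph and about 12% at 15 mph: the slower the traffic, the longer the computer runs per mile, which makes stop-and-go urban driving the hardest case.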

Software Complexity

Developing software for edge computing in autonomous vehicles introduces unique challenges:

- Real-Time Requirements: Software must meet strict timing guarantees with deterministic performance.

- Fault Tolerance: Systems must gracefully handle hardware failures without compromising safety.

- Update Mechanisms: Edge software requires secure, reliable update methods that don’t interrupt vehicle operation.

The industry is addressing these challenges through specialized automotive software platforms and development methodologies focused on safety-critical systems.
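One common pattern behind real-time requirements and fault tolerance is a deadline monitor: if a computation does not finish within its budget, the system falls back to a predefined safe action. The Python sketch below illustrates the idea with `concurrent.futures`; the planner functions and the `minimal_risk_maneuver` fallback are hypothetical. Python threads give no hard real-time guarantees (a late planner keeps running in the background), so production systems rely on real-time operating systems and hardware watchdogs instead.

```python
import concurrent.futures
import time
from typing import Any, Callable

FALLBACK_ACTION = "minimal_risk_maneuver"  # hypothetical predefined safe action


def plan_with_deadline(
    planner: Callable[[Any], str], world_state: Any, deadline_ms: float
) -> str:
    """Return the planner's result if it arrives in time, else the fallback action."""
    executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = executor.submit(planner, world_state)
    try:
        return future.result(timeout=deadline_ms / 1000.0)
    except concurrent.futures.TimeoutError:
        # Deadline missed: do not wait any longer, degrade to the safe action.
        return FALLBACK_ACTION
    finally:
        # Do not block on a late planner; it finishes in the background.
        executor.shutdown(wait=False)
```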

Security Concerns

Edge computing introduces new security considerations for autonomous vehicles:

- Attack Surface: Distributed computing increases the number of potential entry points for cyberattacks.

- Physical Access: Unlike cloud servers in secure data centers, edge computing hardware in vehicles may be physically accessible to attackers.

- Privacy Implications: Camera footage, location traces, and other sensitive sensor data stored or processed on the vehicle raise questions about data ownership and privacy.

Addressing these concerns requires a multi-layered security approach incorporating hardware security modules, secure boot processes, encrypted communications, and regular security updates.
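Authenticated communication is the layer of this approach that is easiest to illustrate in code. The Python sketch below authenticates a hypothetical hazard message with HMAC-SHA256 and rejects stale messages to limit replay attacks. It assumes a pre-shared key purely for brevity; deployed V2X systems instead use certificate-based digital signatures (for example under IEEE 1609.2), and the 0.5 s freshness window is an arbitrary illustrative value.

```python
import hashlib
import hmac
import json
from typing import Any, Dict

MAX_AGE_S = 0.5  # hypothetical freshness window for replay protection


def _canonical(payload: Dict[str, Any]) -> bytes:
    # Deterministic serialization so sender and receiver hash identical bytes.
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def sign(payload: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify(envelope: Dict[str, Any], key: bytes, now_s: float) -> bool:
    expected = hmac.new(key, _canonical(envelope["payload"]), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(expected, envelope["tag"]):
        return False
    age_s = now_s - envelope["payload"]["timestamp_s"]
    return 0.0 <= age_s <= MAX_AGE_S
```

A receiver would call `verify` before acting on any message, so that both a forged or modified payload and an old message replayed by an attacker are ignored.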

Future Directions and Emerging Trends

As edge computing continues to evolve, several emerging trends promise to further reduce latency and enhance autonomous vehicle capabilities:

Neuromorphic Computing

Inspired by the structure and function of the human brain, neuromorphic computing represents a radical departure from conventional computing architectures. These systems use artificial neurons and synapses implemented in hardware to process information in a brain-like manner.

For autonomous vehicles, neuromorphic edge computing offers several advantages:

- Ultra-Low Power Consumption: Neuromorphic chips can be 100-1000x more energy-efficient than conventional processors for certain AI workloads.

- Event-Based Processing: Rather than analyzing every frame from a camera, neuromorphic systems can process only the pixels that change, dramatically reducing computational requirements.

- Natural Handling of Temporal Data: Better processing of time-series data from vehicle sensors, improving motion prediction.

Companies like Intel (with its Loihi chip) and BrainChip are developing neuromorphic solutions specifically targeting autonomous vehicle applications, with early results suggesting latency improvements of up to 95% for certain perception tasks.
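Event-based processing can be approximated in software by comparing consecutive frames and emitting an event only for pixels whose brightness changed by more than a threshold. This Python sketch is a simplification: real event cameras and neuromorphic chips work asynchronously per pixel, usually on log intensity, and the threshold here is arbitrary.

```python
from typing import List, Tuple

Frame = List[List[int]]
Event = Tuple[int, int, int]  # (x, y, polarity): +1 brighter, -1 darker


def frame_to_events(prev: Frame, curr: Frame, threshold: int) -> List[Event]:
    """Emit events only for pixels whose brightness changed by at least `threshold`."""
    events: List[Event] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            if abs(c - p) >= threshold:  # unchanged pixels produce no downstream work
                events.append((x, y, 1 if c > p else -1))
    return events


prev = [[10, 10, 10], [40, 10, 10]]
curr = [[10, 50, 10], [5, 10, 15]]
events = frame_to_events(prev, curr, threshold=20)
```

Only 2 of the 6 pixels generate events here, so the work done downstream scales with how much the scene changes rather than with sensor resolution.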

Collaborative Edge Computing

The future of autonomous vehicle edge computing likely involves greater collaboration between vehicles and infrastructure:

- Swarm Intelligence: Multiple vehicles sharing processed sensor data to create a collective understanding of complex traffic scenarios.

- Dynamic Resource Sharing: Vehicles with excess computing capacity temporarily assisting others with particularly demanding workloads.

- Distributed AI Training: Using the collective processing power of multiple vehicles to improve AI models while preserving privacy.

These collaborative approaches could further reduce effective latency by extending a vehicle’s perception beyond its own sensors, providing advance warning of conditions beyond line-of-sight.
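Extending perception with shared data ultimately means merging another vehicle’s detections into the local world model without double-counting objects both vehicles see. The Python sketch below assumes detections have already been transformed into a shared coordinate frame and uses a hypothetical 2 m radius to treat nearby detections as the same object; real systems also weigh timestamps, uncertainty, and the trustworthiness of the sender.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in metres, shared map frame (assumed)


def merge_detections(
    local: List[Point], shared: List[Point], merge_radius_m: float = 2.0
) -> List[Point]:
    """Add shared detections that do not duplicate an already-known object."""
    merged = list(local)
    for obj in shared:
        # Keep a shared detection only if no known object lies within the merge radius.
        if all(math.dist(obj, known) > merge_radius_m for known in merged):
            merged.append(obj)
    return merged


local = [(10.0, 0.0)]
shared = [(10.5, 0.5), (120.0, 3.0)]  # second object is beyond the local sensors' view
world = merge_detections(local, shared)
```

The first shared detection is treated as a duplicate of the locally seen object, while the second, 120 m ahead, is added to the world model as advance warning.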

Quantum Edge Computing

While still largely theoretical for vehicle applications, quantum computing could eventually play a role in edge computing for autonomous vehicles. Quantum approaches show particular promise for specific problems relevant to autonomous driving:

- Route Optimization: Finding optimal paths through complex transportation networks.

- Sensor Fusion: Integrating data from multiple sensors with mathematical approaches that benefit from quantum speedups.

- Traffic Flow Prediction: Modeling complex traffic systems more accurately than possible with classical computing.

Though practical quantum edge computing for vehicles remains years away, early research suggests it could eventually reduce latency for certain specialized tasks by orders of magnitude compared to classical approaches.

Economic and Social Implications

The reduced latency enabled by edge computing in autonomous vehicles extends beyond technical benefits to create significant economic and social impacts:

Safety and Public Health

The most immediate benefit of reduced decision-making latency is improved safety. Studies suggest that widespread adoption of autonomous vehicles with edge computing capabilities could reduce traffic fatalities by up to 90%—potentially saving over 30,000 lives annually in the United States alone.

Beyond reducing accidents, the improved safety enables new mobility options for vulnerable populations, including the elderly and those with disabilities, enhancing their independence and quality of life.

Economic Productivity

Low-latency autonomous vehicles powered by edge computing could dramatically improve economic productivity through:

- Reduced Congestion: More efficient traffic flow could save billions in lost productivity annually.

- Logistics Optimization: Autonomous trucking with minimal latency enables more efficient supply chains.

- New Business Models: Enabling innovative transportation services not possible with human drivers or high-latency autonomous systems.

Economists at the Brookings Institution estimate that fully realized autonomous vehicle technology could contribute up to $800 billion annually to the US economy by 2050, with edge computing playing a crucial role in unlocking this potential.

Environmental Impact

The precision enabled by low-latency edge computing can significantly reduce the environmental impact of transportation:

- Fuel Efficiency: Smoother driving patterns can reduce fuel consumption by an estimated 15-20%.

- Vehicle Utilization: Higher utilization rates for shared autonomous vehicles reduce the total number of cars needed.

- Traffic Flow: Reduced congestion and more efficient routing lower emissions across the transportation system.

These environmental benefits could play a significant role in meeting climate goals while maintaining or improving mobility.

Conclusion: The Transformative Potential of Edge Computing for Autonomous Vehicles

Edge computing represents a fundamental shift in how autonomous vehicles process information and make decisions. By bringing computation closer to the data source—the vehicle itself—edge computing dramatically reduces latency, enabling near-instantaneous responses to changing road conditions.

The benefits of this reduced latency cascade through all aspects of autonomous vehicle operation:

- Enhanced Safety: Faster reaction times mean fewer accidents and saved lives.

- Improved Reliability: Reduced dependence on network connectivity creates more robust autonomous systems.

- Better Passenger Experience: Smoother, more natural vehicle behavior builds trust and comfort.

- Economic Advantages: More efficient operation reduces costs and enables new business models.

- Environmental Benefits: Precise control reduces energy consumption and emissions.

As edge computing technology continues to advance, we can expect even further reductions in latency and improvements in autonomous vehicle capabilities. The integration of neuromorphic computing, collaborative approaches, and eventually quantum methods promises to push the boundaries of what’s possible in autonomous transportation.

The journey toward fully autonomous vehicles is complex, involving technological, regulatory, and social challenges. However, edge computing’s ability to dramatically reduce latency in decision-making represents a critical milestone on this journey—one that brings us significantly closer to a future of safer, more efficient, and more accessible transportation for all.