Understanding AI Bias in Modern Technology

In recent years, artificial intelligence (AI) has reshaped industries from healthcare to transportation. As these technologies become more integrated into daily life, concerns about AI bias have grown. Studies indicate that biased algorithms can produce significant errors, particularly in self-driving cars, image generators, and facial recognition technology. This article examines each of these areas, the challenges bias creates, and what it means for society.

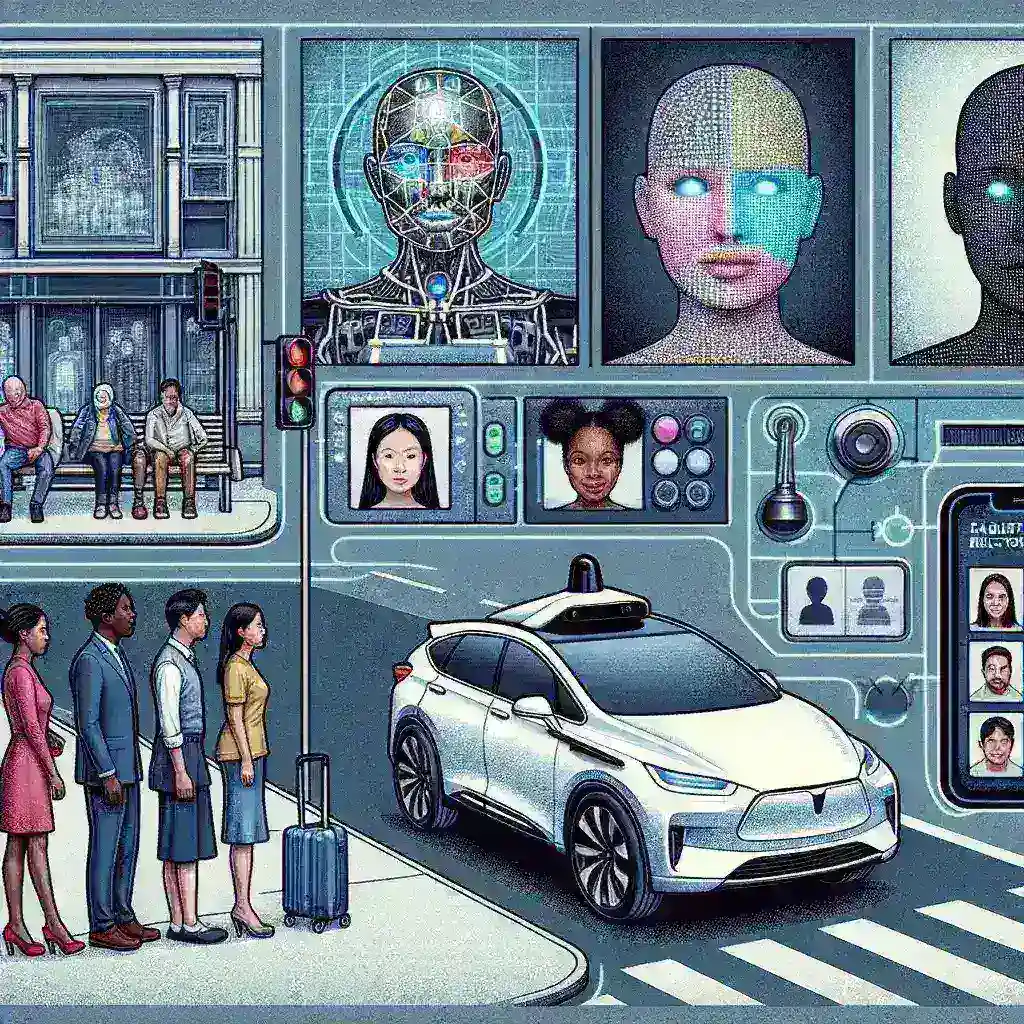

Self-Driving Cars and Pedestrian Detection

Self-driving cars are heralded as the future of transportation, promising to enhance safety and efficiency. They rely heavily on computer vision to identify pedestrians, cyclists, and other objects on the road. Yet research points to troubling biases in pedestrian detection algorithms: studies have shown that these systems often do not recognize pedestrians equally well across racial and ethnic groups.

Statistics on Pedestrian Detection Errors

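- The Gender Shades study from the MIT Media Lab (Buolamwini and Gebru, 2018) found that commercial gender classification systems misclassified darker-skinned women up to 34.7% of the time, compared with at most 0.8% for lighter-skinned men. The study concerns face analysis rather than pedestrian detection, but it shows how large accuracy gaps in computer vision can be.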

- Research from Stanford University highlights that the detection systems in self-driving cars fail more often on non-white pedestrians, which increases the risk of accidents for those pedestrians.

Real-World Implications

Biased pedestrian detection is a direct public safety problem. If a self-driving car detects some pedestrians less reliably than others, the risk of accidents rises, and it rises unevenly across groups. Companies that deploy such systems may also face legal consequences.

Image Generators and Default Biases

Image generators powered by AI, such as those used in design and art creation, also face challenges related to bias. These systems often learn from vast datasets, which may inadvertently reflect societal biases. For instance, image generators tend to create images that align with dominant cultural representations, sidelining diverse perspectives.

Examples of Image Generator Defaults

- Many AI image generators default to producing visuals based on Western beauty standards, often excluding or misrepresenting individuals from different cultural backgrounds.

- Studies have shown that AI-generated images frequently depict gender stereotypes, reinforcing outdated societal norms.

Cultural Relevance and Representation

The lack of cultural sensitivity in AI-generated images can lead to a homogenized view of creativity and expression, stifling innovation and diversity in artistic fields. This raises questions about the responsibility of developers to create more inclusive algorithms that represent the full spectrum of human experience.

Facial Recognition Errors and Privacy Concerns

Facial recognition technology has seen rapid advancements, yet it is fraught with issues surrounding accuracy and privacy. Studies indicate that this technology is more prone to errors in identifying women and people of color, raising ethical concerns about surveillance and profiling.

Expert Insights

Experts argue that deploying biased facial recognition systems can lead to severe consequences, such as wrongful arrests and increased racial profiling. For example, in a 2018 test by the American Civil Liberties Union (ACLU), Amazon's Rekognition software falsely matched 28 members of the U.S. Congress with mugshot photos, and people of color were disproportionately represented among the false matches.

Steps Toward Improved Accuracy

To mitigate these biases, developers and researchers must prioritize diversity in training datasets and actively work to identify and correct biases in algorithms. Rigorous testing protocols, including error rates reported separately for each demographic group, and transparency about how models are built and evaluated can enhance public trust and help ensure these technologies are fair and equitable.
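
One way to make such testing protocols concrete is a disaggregated evaluation: measure the error rate for each demographic group separately instead of reporting a single overall score. The following is a minimal sketch rather than a definitive method. The function names (`error_rates_by_group`, `max_gap`), the record layout, and the toy data are hypothetical, and the sketch assumes group labels are available for the test set.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: error rate} for (group, predicted, actual) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_gap(rates):
    """Largest difference in error rate between any two groups."""
    values = list(rates.values())
    return max(values) - min(values)


# Toy data (hypothetical): group label, model output, ground truth.
records = [
    ("group_a", "match", "match"),
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_a", "match", "no_match"),
    ("group_b", "match", "no_match"),
    ("group_b", "no_match", "match"),
    ("group_b", "match", "match"),
    ("group_b", "no_match", "no_match"),
]

rates = error_rates_by_group(records)
gap = max_gap(rates)
print(rates)
print(gap)
```

A team could treat the gap as a release criterion, for instance by failing a test run when it exceeds an agreed tolerance. What tolerance is acceptable is a policy decision that the code cannot settle.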

Future Predictions and Solutions

As AI technologies continue to evolve, the conversation around bias must remain at the forefront. Researchers predict that tackling AI bias will become increasingly crucial as reliance on these systems grows. Potential solutions include:

- Incorporating diverse datasets during the training phase to better reflect the population.

- Establishing regulatory frameworks to oversee AI development and deployment, ensuring accountability.

- Engaging with communities to understand their needs and perspectives, fostering collaboration between developers and users.
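
The first item in the list above, training data that better reflects the population, can be checked with a simple audit that compares each group's share of the dataset with a reference share. This is a rough sketch under stated assumptions: the function name `representation_gaps`, the group labels, and the reference shares are all hypothetical, and real audits depend on how group membership is defined and recorded.

```python
from collections import Counter


def representation_gaps(labels, reference_shares):
    """Return {group: dataset share minus reference share}.

    A negative value means the group is underrepresented in the
    dataset relative to the reference population.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical group labels for a training set of 8 images.
labels = ["a"] * 6 + ["b"] * 2
# Hypothetical target shares for the population being served.
reference_shares = {"a": 0.5, "b": 0.5}

gaps = representation_gaps(labels, reference_shares)
underrepresented = [group for group, gap in gaps.items() if gap < 0]
print(gaps)
print(underrepresented)
```

Matching population shares is only a starting point. A group can be well represented by count and still be poorly served if its examples are low quality or narrowly sampled.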

Conclusion

Addressing AI bias is a complex but essential endeavor. From self-driving cars to image generators and facial recognition systems, the consequences of unchecked bias can be detrimental to individuals and society at large. As we navigate this technological landscape, it is imperative that we prioritize fairness, representation, and ethics in the development of AI solutions.